Today’s trend is to add AI to everything, typically using a connection to the cloud, a complex SoC (such as in mobile products), or a GPU/FPGA. The cloud has been transformative for many industries, and production-grade AI still largely relies on cloud platforms to perform essential inference tasks. However, newer ASICs are changing this dynamic, and designers can now build systems that perform basic AI tasks on an end device. Devices that include the proper hardware can perform AI inference, and even some lightweight training, directly on the end device without connecting to the cloud.

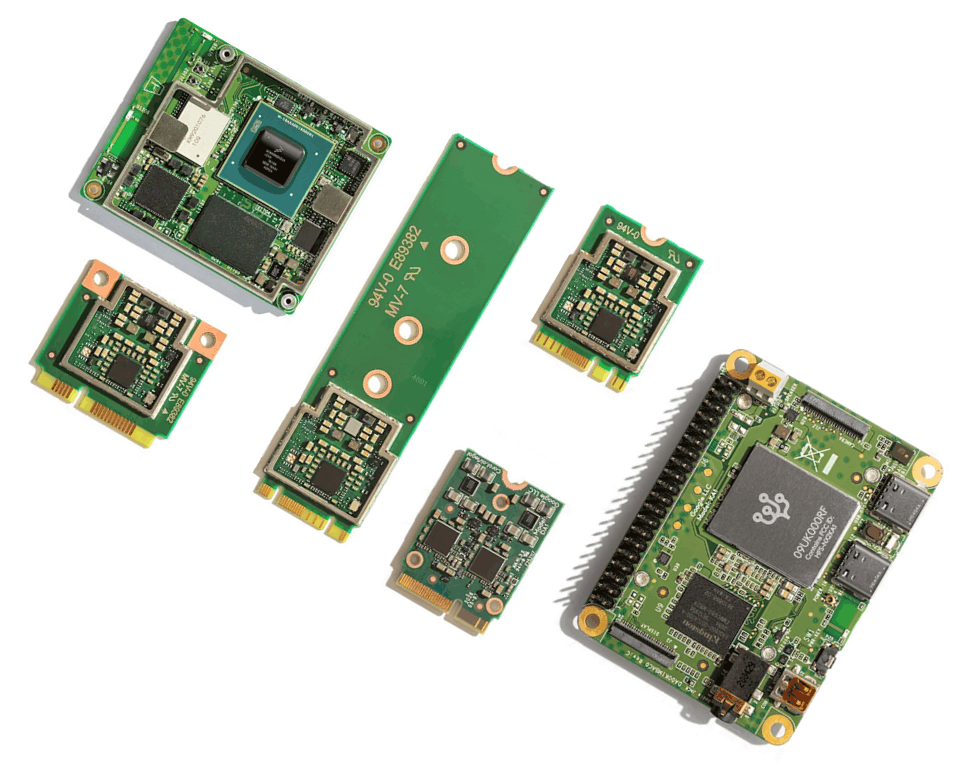

A Google Coral accelerator module is an SMD component that can be added to a board directly or implemented on add-in cards over standard connectors. The Coral product line includes a basic module and several accelerator boards that can be paired with a host processor, sensors, and other peripherals to implement a complete AI inference solution on a single device. Since 2019, the Coral product line has expanded to include several modules with different form factors and connector options, giving designers multiple ways to implement on-device AI.

About Google Coral

As AI engineers realized the sequential logic used in conventional processors was insufficient for production-grade AI inference, semiconductor companies responded with three possible solutions:

- Parallelization in high-compute GPUs, specifically in a data center environment.

- Implementation of tensor processing in FPGAs.

- Complete redesigns of logic blocks specifically for implementing AI computation.

The Google Coral product line follows the third path in this list: the chip is specifically designed to implement the tensor calculations used in inference and training operations in AI-capable systems. The product line also includes several pre-built modules that connect to a base board with board-to-board connectors, giving designers several options for adding AI capabilities to a new system.

Google Coral Accelerator Module

All Google Coral accelerator modules are built around the Coral Edge TPU (tensor processing unit). This small ASIC is designed to receive a serial data stream over a high-speed interface, use the input data for inference in an AI model, and provide output over the same interface back to a host processor. The host processor then uses the output in standard logical operations. The Coral Edge TPU is the main processor in a Coral accelerator module, which is a small SMD multi-chip module, as shown below.

Google Coral accelerator module package and footprint

The Edge TPU built into the module is intended for lightweight inference operations involving images, low-frame-rate video, or digital data streams from peripherals. This product is not intended for training with large datasets; that work is best done in the cloud, with updated models pushed to the device over the air or by manual flashing. Here are some of the core specifications of the Google Coral accelerator module:

| Specification | Value |
| --- | --- |
| Input voltage | Main power: 3.3 V; digital I/O: 1.8 V |
| Data interface | USB 2.0 or PCIe Gen2 |
| Peak compute | 4 TOPS (8-bit integer data) |
| Power efficiency | 2 TOPS per watt |
| Footprint | SMD (non-standard LGA) |
| Language support | Runs TensorFlow Lite models; supports Python implementations |

As is standard for many processors, designers must supply multiple voltage levels (3.3 V main power and 1.8 V digital I/O) to operate the device. At peak compute, the 4 TOPS and 2 TOPS per watt figures above imply roughly 2 W of power dissipation; with typical package-to-ambient thermal resistance values as high as 20 °C/W for this type of component, peak operation can produce a temperature rise as high as 40 °C. Therefore, proper cooling measures must be implemented to keep the temperature in check.
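The thermal estimate can be checked with a few lines of arithmetic. This is a minimal sketch, not a substitute for a thermal simulation: it derives peak power from the table's 4 TOPS and 2 TOPS per watt figures and uses the 20 °C/W package-to-ambient value quoted above as an assumed worst case. The function names are illustrative only.

```python
def peak_power_w(peak_tops: float, tops_per_watt: float) -> float:
    """Power drawn at peak compute, from throughput and efficiency."""
    return peak_tops / tops_per_watt


def temperature_rise_c(power_w: float, theta_c_per_w: float) -> float:
    """Steady-state temperature rise above ambient for a thermal resistance."""
    return power_w * theta_c_per_w


# Values from the specification table: 4 TOPS peak, 2 TOPS per watt.
power = peak_power_w(4.0, 2.0)

# Assumed worst-case package-to-ambient thermal resistance (not a datasheet value).
rise = temperature_rise_c(power, 20.0)

print(f"Peak power: {power:.1f} W, temperature rise: {rise:.0f} °C")
```

The rise scales linearly with both dissipated power and thermal resistance, so a heatsink or copper pour that halves the thermal resistance also halves the temperature rise.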

Accelerator Boards

The Google Coral product line includes several modular boards that connect to a base board over standard connectors. These products offer a simple way to begin developing an application for a Google Coral accelerator module. The available boards include:

- Mini PCIe Accelerator

- M.2 Accelerator A+E key

- M.2 Accelerator B+M key

- M.2 Accelerator E key with dual TPU

- System-on-Module (SoM) (supports Python and C++)

More information can be found on the Google Coral products page.

Google Coral Mini PCIe Accelerator module

The base board used with a Google Coral accelerator module or one of the above boards will need to include all peripherals, interfaces, and power regulation required for the specific product. For the modular boards above, the base board will also need to match the module connector pinout and input voltage. Whether the design intent is to use a module or one of the above expansion cards, the base board design will need to include a processor that supports the system’s embedded application.

Pairing With a Processor

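The main requirement for accessing the TPU on a Coral module is a host processor with, at minimum, a USB 2.0 interface (PCIe Gen2 is the alternative). On interface grounds alone, this puts some popular MCUs in the running as a host processor, such as the MSP430 or a similar MCU with modest compute. However, the host must also be able to run the Edge TPU runtime and a TensorFlow Lite interpreter, so check the supported platforms before committing to a part. Tutorials are also available for implementation on ESP32 and STM32.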
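To get a feel for how the choice of host interface affects latency, the sketch below estimates the minimum time needed to move one input tensor to the TPU at the raw line rate of each interface. The input size (a 224 × 224 RGB image at one byte per channel) is a hypothetical example, and the result ignores protocol overhead, so real transfer times will be longer.

```python
def transfer_time_ms(payload_bytes: int, line_rate_bps: float) -> float:
    """Lower-bound transfer time in milliseconds at a raw line rate."""
    return payload_bytes * 8 / line_rate_bps * 1e3


# USB 2.0 high-speed signaling rate: 480 Mbit/s.
USB2_HS_BPS = 480e6

# PCIe Gen2, one lane: 5 GT/s with 8b/10b encoding, so 4 Gbit/s of payload bits.
PCIE_GEN2_X1_BPS = 5e9 * 8 / 10

# Hypothetical input tensor: 224 x 224 pixels, 3 channels, 8-bit values.
input_bytes = 224 * 224 * 3

usb_ms = transfer_time_ms(input_bytes, USB2_HS_BPS)
pcie_ms = transfer_time_ms(input_bytes, PCIE_GEN2_X1_BPS)

print(f"USB 2.0:      {usb_ms:.2f} ms per frame (lower bound)")
print(f"PCIe Gen2 x1: {pcie_ms:.2f} ms per frame (lower bound)")
```

For small images at low frame rates, either interface leaves plenty of headroom; the gap matters more as input size or frame rate grows.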

Before you start developing an application for your Coral TPU, look at the Google Coral GitHub page for the required libraries. Inference operations on a Coral TPU are based on the TensorFlow Lite C++ API and the Coral C++ library (libcoral), which requires some additional code from the Edge TPU Runtime library (libedgetpu). Google has also created the PyCoral library, which provides support for Python implementations.
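As a rough illustration of what an inference call looks like with PyCoral, the sketch below classifies a single image. It assumes the `pycoral` and `Pillow` packages and the Edge TPU runtime are installed on the host, and that the model has been compiled for the Edge TPU. The file names in the usage comment and the `label_for` helper are hypothetical; the third-party imports sit inside the function so the file can be loaded on a machine without the Coral libraries.

```python
def label_for(labels: dict, class_id: int) -> str:
    """Return the text label for a class ID, falling back to the ID itself."""
    return labels.get(class_id, str(class_id))


def classify_image(model_path: str, image_path: str, labels_path: str, top_k: int = 1):
    """Run one image through an Edge TPU model and return (label, score) pairs."""
    # Imported here because these packages are only present on a Coral host.
    from PIL import Image
    from pycoral.adapters import classify, common
    from pycoral.utils.dataset import read_label_file
    from pycoral.utils.edgetpu import make_interpreter

    # Load the compiled model and bind it to the Edge TPU.
    interpreter = make_interpreter(model_path)
    interpreter.allocate_tensors()

    # Resize the image to the input size the model expects.
    size = common.input_size(interpreter)
    image = Image.open(image_path).convert("RGB").resize(size)

    # Send the input to the TPU, run inference, and read back the results.
    common.set_input(interpreter, image)
    interpreter.invoke()
    classes = classify.get_classes(interpreter, top_k=top_k)

    labels = read_label_file(labels_path)
    return [(label_for(labels, c.id), c.score) for c in classes]


# Example call on a Coral host (hypothetical file names):
# results = classify_image("model_edgetpu.tflite", "image.jpg", "labels.txt")
```

The host handles file I/O and image preprocessing, while the TPU handles only the tensor computation triggered by `interpreter.invoke()`.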

When you need to find footprints and sourcing data for a Google Coral accelerator module or other components, use the complete set of search features in Ultra Librarian. The platform gives you access to PCB footprints, technical data, and ECAD/MCAD models alongside sourcing information to help you stay ahead of supply chain volatility. All ECAD data you’ll find on Ultra Librarian is compatible with popular ECAD applications and is verified by component manufacturers.

Working with Ultra Librarian sets up your team for success to ensure streamlined and error-free design, production, and sourcing. Register today for free.